blink

Huh

.

The fast lady’s song

It is now public news that Opera is sidelining the Presto engine in favour of WebKit, something I expected before I knew.

What is good for business and what is good for the Web are not the same. Opera’s business case for Presto has not been so strong. Most of the development effort had the least perceptible effect for the users (and the profit), and what has had the most perceptible effect has taken the least effort.

Slogan Ready

So the Bluetooth Special Interest Group goes for the “Smart” moniker to market multi-technology Bluetooth 4.0. Fine, as it were, though it reminds me of the e-moniker during the Internet bubble and the rest. It is common for consumer devices these days to get Smart, and this is the market they are aiming for.

However, as Engadget succinctly headlines, Bluetooth SIG unveils Smart Marks, explains v4.0 compatibility with unnecessary complexity. You don’t just have Smart, you have Smart Ready for devices that are ready to smart. “Ready” implies “buy more expensive now, get happy later”. If you buy a Ready device it will be able to work with The Shiny New Thing on the near horizon.

HTML Video: Transcription

Reply to How to include extended transcripts with video

This is written not only from a WAI perspective, but also from the perspective of making video/audio WAI usable.

Before jumping into syntax at the end I would like to consider the requirements for captions and transcriptions. Here are four of mine, in part based on an earlier post I have made.

1. IT HAS TO BE COLLABORATIVE

Look at the process where transcripts are made. Take the TED.com videos as an example, which I think is more useful than e.g. YouTube.

0. Binary video

Step 1: Captioning

1a. autocaption (Speech Recognition)

1c. contributors turn into regular language, add value

1e. editors take the contributions and fix them, add quality

Step 2: Caption translation

2a. autotranslation

2c. contributors translate into target language and script, add value

2e. editors take the contributions and fix them, add quality

Step 3: Encoding context

3a. Automatic metadata

3c. contributors correct and tag the video, add value

3e. editors take the contributions and fix them, add quality

Step 2 is dependent on step 1, while step 3 is semi-independent and can be shunted off to some metadata discussion. Some of it is relevant, though. TED talks are talks made by a single speaker, but in interviews or discussions there may be multiple voices.

What I am aiming at, however, is that in practice these steps are carried out by different people, often at different times and places. The infrastructure has to allow for that. The one making the video may not be the one to make the captions or transcriptions, who in turn may not be the one to translate them into readable Mandarin Chinese.
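To make that requirement a little more concrete, here is a minimal sketch in Python of how a site might record who produced which caption track. All names here (`Track`, `stage`, `source`, the sample authors) are hypothetical and only illustrate the steps listed above; this is not how TED.com or any other site actually stores its data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Track:
    """One caption or translation track for a video (hypothetical model)."""
    video_id: str
    language: str                     # e.g. "en" or "zh-Hans"
    stage: str                        # "auto", "contributor" or "editor"
    author: str                       # the person or program that produced it
    source: Optional["Track"] = None  # the caption a translation was made from


def is_translation(track: Track) -> bool:
    """Step 2 tracks depend on a step 1 track; step 1 tracks have no source."""
    return track.source is not None


# Three tracks made by different parties, possibly at different times.
auto_en = Track("talk-1", "en", "auto", "speech-recognizer")
edited_en = Track("talk-1", "en", "editor", "alice")
zh = Track("talk-1", "zh-Hans", "contributor", "bob", source=edited_en)
```

Keeping the `source` link is one way to express that step 2 depends on step 1: if the English caption is corrected later, the translations derived from it can be found and flagged for review.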

2. IT HAS TO HAVE SENSIBLE FALLBACKS

In the absence of collaboration we have to rely on automatic captioning and translations, provided by the web site and/or the user agent. With today’s technology, using both will likely end up as double Dutch, but it is still better than nothing. The transcription from that Urdu video may not be easily understandable, but at least we might get an inkling of what it is about and whether we should spend effort getting a better translation. A caption made by a human should be vastly better, though in real cases it is not unlikely that a caption is neither made by a human nor better than what a UA can provide.
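A fallback order along these lines could be sketched as follows, in Python. The stage names and the ranking are assumptions made for illustration; in particular the sketch simply assumes that edited beats contributed beats automatic, which, as noted, will not always hold in practice.

```python
# Assumed quality order: a higher number is preferred.
STAGE_RANK = {"editor": 3, "contributor": 2, "auto": 1}


def choose_caption(tracks, wanted_language):
    """Pick a caption track and say what the user agent still has to do.

    tracks is a list of (language, stage) tuples available for the video.
    Returns (track, plan), where plan is "as-is", "machine-translate"
    or "autocaption".
    """
    same_language = [t for t in tracks if t[0] == wanted_language]
    if same_language:
        # Best case: something already exists in the viewer's language.
        return max(same_language, key=lambda t: STAGE_RANK[t[1]]), "as-is"
    if tracks:
        # Otherwise machine-translate the best track in any language.
        return max(tracks, key=lambda t: STAGE_RANK[t[1]]), "machine-translate"
    # Nothing at all: the user agent has to caption the audio itself.
    return None, "autocaption"


available = [("ur", "auto"), ("ur", "editor")]
choice = choose_caption(available, "en")
```

In this example the viewer wants English but only Urdu tracks exist, so the edited Urdu caption is chosen and marked for machine translation, which avoids stacking automatic translation on top of automatic captioning when a human-made caption is available.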

Web Animations, towards a Web 2.1

SVG Animation (based on SMIL) and CSS Animation offer some similar features for animating Web content. Harmonising these two technologies has been considered on a number of occasions but a path forward has yet to be established. In response to a feature-by-feature comparison of the two technologies the question was raised, “What are the animation features required, prioritised by use case?” (Meeting minutes which lead to Action-48) although a closely related question was, “What would it take to make CSS Animations achieve feature parity with SVG Animations?”

From face-to-face meeting on Web Animations, as discussed here. There is now a CSS-SVG Effects Task Force activity on this.

It has taken time, but by now CSS and SVG are on the same team, which is something of a precondition for making the specifications well integrated too.

The day I lost my trust in Gmail…

…was the day (today) when I got the message on a yellow background that “your draft has been discarded”. Exactly what led to this I don’t know, and Gmail didn’t tell. Was it a keypress in the wrong area of the screen, a connectivity glitch, or a Google employee deciding that “yes, I do want to be evil”? I simply do not know.

What is wrong with CSS

A blog entry titled “what is right with CSS” would be far longer. CSS may remain my favourite W3C spec, but in retrospect there are a number of things that haven’t worked out and should have.

Dante

Surely the third round of the ninth circle of hell would be filled up with the designers and programmers of Chinese Internet banking sites.

CSS 2.1 Solid Soon?

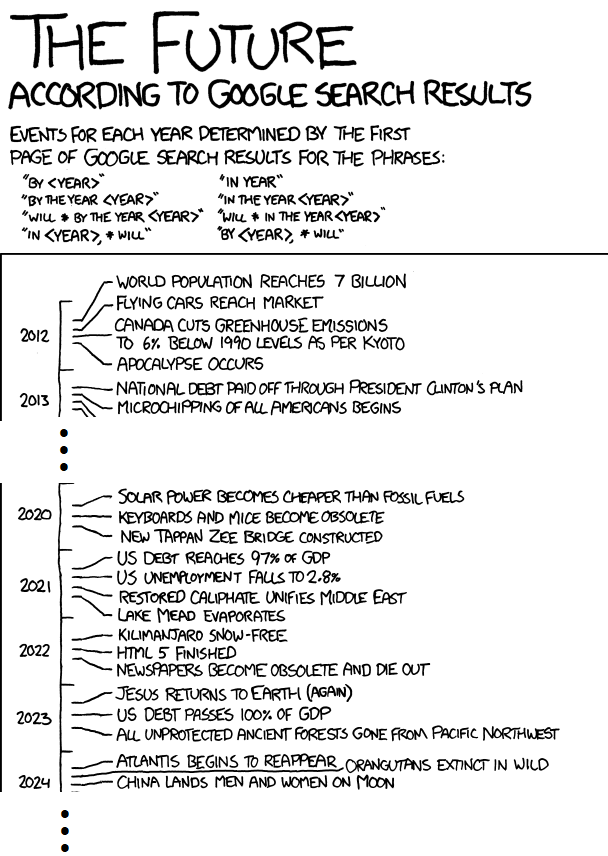

XKCD recently published the future and found through a good number of Google searches that finishing up HTML5 would herald the demise of newspapers and the Third Coming of Christ.

Coincidentally the W3C declared that CSS 2.1 had finally reached Proposed Recommendation status, and surely, surely!, the end, in the form of CSS 2.1 as a Recommendation, should be nigh [Note from the future: it became one later the same year]. I recapped the story in an entry from almost four years earlier, Cruelly Slow Slog, a slog that cruelly continued for four more years. 11 years of labour is not bad for what was intended as a quick fix.
